NHTSA announced that it has launched an investigation into Tesla for not properly reporting crashes involving its Autopilot and Full Self-Driving systems.

The National Highway Traffic Safety Administration (NHTSA), the road safety regulator in the US, already has several open investigations into Tesla, most of which are related to Tesla’s advanced driver assistance systems (ADAS): Autopilot and Full Self-Driving (FSD).

Now, it’s opening a new investigation into inconsistencies in how Tesla reports crashes involving its ADAS systems.

Under Standing General Order 2021-01 (the “SGO”), automakers are required to report crashes involving their autonomous driving and advanced driver assistance systems to NHTSA within five days of being notified of them.

In Tesla’s case, the company often receives notification within minutes of a crash, as its vehicles send an automatic collision snapshot to its mothership server following an accident.

Now, NHTSA claims that Tesla has sometimes waited months to report crashes involving Autopilot and Full Self-Driving.

In its notice opening the new probe into Tesla, the agency wrote:

The Office of Defects Investigation (“ODI”) has identified numerous incident reports submitted by Tesla, Inc. (“Tesla”) in response to Standing General Order 2021-01 (the “SGO”), in which the reported crashes occurred several months or more before the dates of the reports. The majority of these reports involved crashes in which the Standing General Order in place at the time required a report to be submitted within one or five days of Tesla receiving notice of the crash. When the reports were submitted, Tesla submitted them in one of two ways. Many of the reports were submitted as part of a single batch, while others were submitted on a rolling basis.

Tesla told NHTSA that this was due to an “error” in its systems, and it claims to have fixed it, but the agency wants to investigate further:

Initial engagement between ODI and Tesla on the issue indicates that the timing of the reports was due to an issue with Tesla’s data collection, which, according to Tesla, has now been fixed. NHTSA is opening this Audit Query, a standard process for reviewing compliance with legal requirements, to evaluate the cause of the potential delays in reporting, the scope of any such delays, and the mitigations that Tesla has developed to address them. As part of this review, NHTSA will assess whether any reports of prior incidents remain outstanding and whether the reports that were submitted include all of the required and available data.

It’s not surprising to see the regulator being suspicious of Tesla’s excuse, following our report that Tesla lied to and misled police and plaintiffs to hide its Autopilot crash data in a recent wrongful death case that the automaker lost at trial.

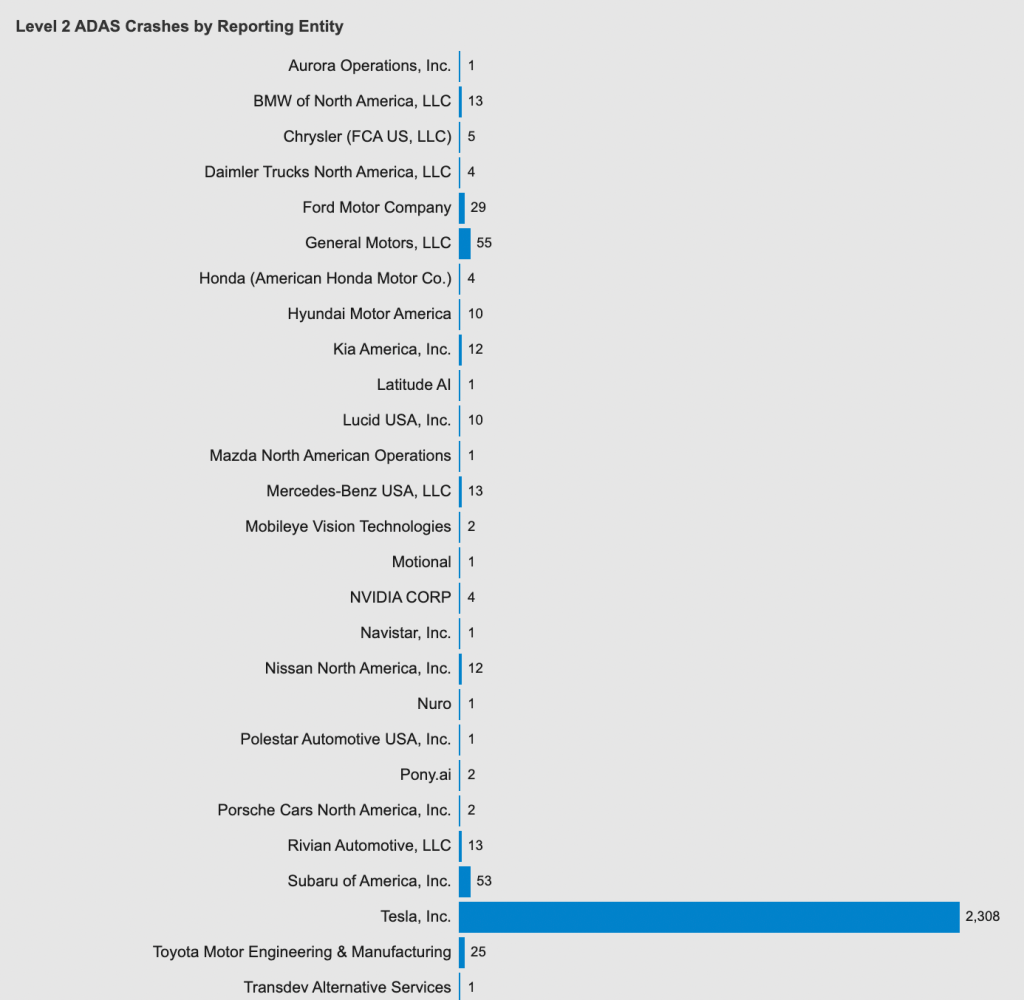

Tesla leads stage 2 ADAS system crash information reporting by a mile (ADAS stage 2 on the left and ADS stage 3-5 on the fitting):

Tesla appears on the chart only for level 2 driver assistance systems and not in the crash reporting for automated driving systems because, despite what its CEO and some shareholders claim, Tesla doesn’t have any system deployed in the US that qualifies as fully automated.

However, when it comes to level 2 ADAS crash reporting, Tesla leads with over 2,300 crashes, followed by GM, which reports 55 crashes with its Super Cruise system.

It’s not the first time that Tesla has had issues with NHTSA’s crash reporting. We previously reported that Tesla abuses NHTSA’s confidentiality policies to have much of the data related to its crashes redacted, and that the automaker claimed it would ‘suffer financial harm’ if its self-driving crash data became public.

Electrek’s Take

It certainly wouldn’t be the first time that Tesla has tried to weasel its way out of reporting crash data related to its automated driving efforts.

At this point, it’s basically its modus operandi.

Yet we’re supposed to trust the company to deploy safe systems that automate driving?

Tesla has proven extremely opaque and untrustworthy in its safety reporting regarding Autopilot and Full Self-Driving. I think that’s a fair statement backed by facts.

That’s not what you want from a company deploying products that are potentially dangerous to road users.